Deploying Large Language Models (LLMs) on Alibaba Cloud PAI

The blog explores how to deploy large language models (LLMs) on Alibaba Cloud's PAI platform, highlighting its scalable infrastructure and integrated AI development tools. It walks through model training, inference, and optimization using PAI's Machine Learning Designer and Elastic Algorithm Service. The post emphasizes ease of deployment, GPU acceleration, and support for open-source frameworks like PyTorch and TensorFlow. Real-world use cases include intelligent customer service, financial forecasting, and personalized content generation.


Abhishek Gupta

9/4/2025 · 9 min read

The surge in interest in, and adoption of, large language models (LLMs) such as GPT, Qwen, and Llama has accelerated the need for scalable, efficient, and easily manageable AI infrastructure. Alibaba Cloud Platform for AI (PAI) provides an integrated, enterprise-grade environment for developing, deploying, and maintaining LLMs across the training and inference lifecycle. With a modular design spanning data processing, visual model building, distributed training, and advanced inference, PAI supports both out-of-the-box and custom LLM deployments and integrates with other Alibaba Cloud offerings such as OSS, MaxCompute, and VPC.

Alibaba Cloud PAI Platform Overview

Alibaba Cloud’s Platform for AI (PAI) is a full-stack AI platform offering end-to-end support from data annotation to model deployment. Its platform is modular and encompasses the following main elements:

PAI Workspaces: Logical environments for resource grouping, permission management, and collaborative AI development.

Machine Learning Designer: A drag-and-drop, visual modeling platform equipped with 140+ advanced AI components, templates for NLP and LLMs, and support for both code-free and code-based workflows.

PAI Deep Learning Containers (DLC): For scalable, distributed training jobs across CPUs and GPUs.

Data Science Workshop (DSW): Managed notebook environments for code-centric development.

Elastic Algorithm Service (EAS): The managed, elastic inference serving platform for deploying models—including LLMs—with minimal configuration.

Model Gallery: A catalog of pre-trained, community, and Alibaba-developed models for one-click deployment or fine-tuning.

PAI-EAS stands out by offering one-click LLM deployment (public models or custom), with deep integration of high-performance inference engines (BladeLLM and vLLM), intelligent routing, seamless networking, and unified OpenAI-compatible APIs.

Billing and scaling options include pay-as-you-go, subscriptions, resource plans for bulk discounts, and auto-scaling per inference duration for burst workloads.

Infrastructure Setup for LLM Deployment on Alibaba Cloud

1. Resource Planning and Workspace Setup

Workspaces allow for centralized management of compute resources, user permissions, and AI development tools. Upon entering a workspace in the PAI console, users can:

View and assign resources such as GPUs, CPUs, storage (OSS/NAS), and MaxCompute for big data analytics.

Configure resource quotas and user roles (RAM-based control for administrators, algorithm developers, etc.).

Mount OSS buckets, configure event notifications (via EventBridge), and set storage paths for transient and persistent data.

Choose a resource type for EAS: the public resource group (quick to start, with shared infrastructure and cost) or dedicated or virtual resource groups for stricter security, performance, or isolation needs.

Whichever group you choose, size the GPU count and memory to the chosen LLM (e.g., Qwen-7B, Llama2-13B).
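To size GPU memory for a given LLM, a rough rule is model weights plus KV cache plus some runtime overhead. The sketch below estimates each term. The Qwen-7B-like shape (32 layers, 32 KV heads, head dimension 128) and the 20% overhead factor are assumptions for illustration; check the model's config.json and the inference engine's own memory report before committing to an instance type.

```python
from dataclasses import dataclass

# Bytes per stored value for common precisions (int4 packs two values per byte)
BYTES_PER_VALUE = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}
GIB = 2**30


@dataclass
class ModelShape:
    params_billion: float  # total parameter count, in billions
    num_layers: int        # transformer blocks
    num_kv_heads: int      # key/value heads (fewer than query heads under GQA)
    head_dim: int          # per-head dimension


def weights_gib(shape: ModelShape, dtype: str = "fp16") -> float:
    """Memory for the model weights alone."""
    return shape.params_billion * 1e9 * BYTES_PER_VALUE[dtype] / GIB


def kv_cache_gib(shape: ModelShape, context_len: int, batch_size: int,
                 dtype: str = "fp16") -> float:
    """Memory for cached keys and values across all layers."""
    # Per token: one K and one V vector per layer per KV head
    per_token = 2 * shape.num_layers * shape.num_kv_heads * shape.head_dim * BYTES_PER_VALUE[dtype]
    return per_token * context_len * batch_size / GIB


def serving_gib(shape: ModelShape, context_len: int, batch_size: int,
                dtype: str = "fp16", overhead: float = 1.2) -> float:
    """Weights + KV cache, padded by an assumed overhead factor for activations and runtime."""
    total = weights_gib(shape, dtype) + kv_cache_gib(shape, context_len, batch_size, dtype)
    return total * overhead


if __name__ == "__main__":
    # Assumed Qwen-7B-like shape; verify against the model's config.json
    qwen_7b = ModelShape(params_billion=7.0, num_layers=32, num_kv_heads=32, head_dim=128)
    print(f"weights:  {weights_gib(qwen_7b):.1f} GiB")
    print(f"kv cache: {kv_cache_gib(qwen_7b, context_len=8192, batch_size=1):.1f} GiB")
    print(f"total:    {serving_gib(qwen_7b, context_len=8192, batch_size=1):.1f} GiB")
```

Under these assumptions, fp16 weights take about 13 GiB and one 8K-token sequence adds 4 GiB of KV cache, which is consistent with a single 24 GB-class GPU (such as an A10) being suggested for 7B models while larger models or batches need more cards.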

Best Practice:

For efficient deployment, use public resource groups for experimentation and dedicated, pre-paid virtual groups for production to ensure consistent performance and security.

2. Storage and Networking

OSS (Object Storage Service): Used for storing training data, model files for serving, configuration files, and business assets. Multiple mounting methods are supported (ossfs, JindoFuse, OSS Connector for AI/ML) to enable both sequential streaming and high-throughput random reads, both of which matter for LLM training and inference.

VPC (Virtual Private Cloud): For internal traffic, multi-service integration, network isolation, and security. VPC configuration is crucial for compliance in production environments and private API exposure.

MaxCompute: Used for big data preprocessing and analytics—data flows easily from MaxCompute to training pipelines and can be combined with PAI pipelines for complex data engineering tasks.

PAI Workspaces and Resource Configuration

Workspaces enable fine-grained resource control and role-based access management. Key elements include:

Compute Types:

Intelligent Computing Lingjun: High-performance resource pools for model scaling and RDMA networking.

General Compute: For development, testing, and less resource-intensive jobs.

ACS Resources: Designed for EAS/DLC inference or scheduling jobs.

MaxCompute: CPU resources for Designer or data analytics.

Default Storage: Each workspace can have a default OSS bucket for intermediate and final data storage (critical for pipeline consistency and data lineage).

Roles & Notifications: Assign roles (admin, dev, tester) mapped to corporate policy; set up event-based notifications for job failures, successes, and log monitoring (integrated with DingTalk, Email, etc.).

Resource Monitoring: Real-time usage reporting (CPU/GPU/memory), auto-scaling, and high-availability options.

Model Training Workflows with PAI Designer

PAI Designer facilitates end-to-end LLM workflows:

Data Processing:

Complex data cleaning is achieved via pipelines—masking sensitive data, normalizing text, language recognition, N-gram/length/perplexity filtering, and deduplication.

Designer offers templates specifically for Wikipedia, arXiv, SFT, and domain-specific data, promoting best practices in curation and privacy.
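To make a few of these cleaning steps concrete, here is a minimal plain-Python sketch of length filtering, a repeated-n-gram filter, and exact deduplication by hashing normalized text. It is not how the Designer components are implemented; the thresholds `min_chars` and `max_dup_ngram_ratio` are hypothetical defaults chosen for illustration.

```python
import hashlib
import re
import unicodedata
from typing import Iterable, Iterator


def normalize(text: str) -> str:
    """Unicode-normalize, lowercase, and collapse whitespace."""
    text = unicodedata.normalize("NFKC", text).lower()
    return re.sub(r"\s+", " ", text).strip()


def dup_ngram_ratio(text: str, n: int = 3) -> float:
    """Fraction of word n-grams that repeat an earlier n-gram."""
    words = text.split()
    ngrams = [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]
    if not ngrams:
        return 0.0
    return 1 - len(set(ngrams)) / len(ngrams)


def clean(docs: Iterable[str], min_chars: int = 20,
          max_dup_ngram_ratio: float = 0.3) -> Iterator[str]:
    """Yield normalized documents that pass the length, repetition, and dedup filters."""
    seen: set[str] = set()
    for doc in docs:
        text = normalize(doc)
        if len(text) < min_chars:
            continue  # too short to be useful training text
        if dup_ngram_ratio(text) > max_dup_ngram_ratio:
            continue  # highly repetitive, likely boilerplate or spam
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate after normalization
        seen.add(digest)
        yield text


if __name__ == "__main__":
    docs = [
        "PAI Designer supports visual LLM data pipelines.",
        "PAI   Designer supports visual LLM data pipelines.",  # duplicate after normalization
        "too short",
        "buy now buy now buy now buy now buy now buy now",     # repetitive
    ]
    print(list(clean(docs)))
```

Production pipelines typically add near-duplicate detection (e.g., MinHash) and model-based perplexity scoring on top of exact hashing; this sketch stops at the simplest versions of each idea.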

Model Training:

Models such as Qwen, Llama, and Baichuan can be trained or fine-tuned, either with full-parameter fine-tuning or with parameter-efficient methods such as LoRA and QLoRA.

The visual pipeline interface hides the underlying Deep Learning Containers (DLC) orchestration: parameters such as learning rate, batch size, pre-trained checkpoint, and distributed training configuration are set in a few clicks.

Built-in support for multi-model, concurrent comparison of training outcomes (A/B evaluation, benchmarking).
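The appeal of LoRA-style fine-tuning is easy to quantify. Instead of updating a d_out × d_in weight matrix W, LoRA freezes W and trains two low-rank factors B (d_out × r) and A (r × d_in), using W + (alpha / r)·BA at inference. The sketch below counts trainable parameters for that update; the hidden size of 4096, 32 layers, and adapting only the four attention projections (q/k/v/o) are illustrative assumptions, not PAI defaults.

```python
def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters in a LoRA update B @ A for one d_out x d_in matrix."""
    return rank * (d_out + d_in)


def trainable_fraction(hidden: int, num_layers: int, rank: int,
                       matrices_per_layer: int = 4) -> float:
    """LoRA parameters relative to fully fine-tuning the same square projections."""
    full = num_layers * matrices_per_layer * hidden * hidden
    lora = num_layers * matrices_per_layer * lora_params(hidden, hidden, rank)
    return lora / full


if __name__ == "__main__":
    # Assumed 7B-class shape: hidden size 4096, 32 layers, adapting q/k/v/o only
    print(lora_params(4096, 4096, rank=8))  # 65536, vs 16,777,216 for the full matrix
    print(f"{trainable_fraction(4096, 32, rank=8):.4%} of the adapted weights are trained")
```

QLoRA keeps the same adapter math but stores the frozen base weights in 4-bit precision, so its main saving is GPU memory rather than a further cut in trainable parameters.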

Offline and Batch Inference:

The pipeline includes offline inference nodes that automatically export results to OSS.

After inference, developers can right-click a node to view, download, or evaluate its results.

Multi-model Comparison:

The same data can be used to train, fine-tune, and test several models in parallel (e.g., Qwen-7B vs. Llama2-7B) for head-to-head evaluation.

Model Inference Deployment Using PAI-EAS

PAI-EAS (Elastic Algorithm Service) is the central entry point for LLM inference services:

One-Click Deployment:

Log on to the PAI console.

Select a region and workspace, open the EAS portal, and deploy via "LLM Deployment".

Choose a public model (e.g., Qwen3-8B, DeepSeek, Llama2, Baichuan, ChatGLM) or a custom model mounted from OSS.

Select an inference engine (BladeLLM or vLLM) and a deployment template (standalone or distributed).

Wait about 5 minutes while EAS configures the instance, GPU, and image automatically.

Resource Recommendation:

For models ranging from Qwen-1.8B to Qwen-72B, the platform suggests configurations from a single GU30/A10 for smaller models up to 8x V100 or 2x A100 for ultra-large models.

Batch and scale-out options are available for higher throughput scenarios.

Parameterization:

Adjust sampling settings (max_tokens, temperature, top_k, top_p, etc.) via the API or web interface.

Adjust the run command to select the backend (e.g., --backend=vllm) for acceleration.
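On the API side, these settings travel as fields of an OpenAI-style chat request body. The sketch below builds such a body with basic range checks. Field names follow the OpenAI chat-completions schema; `top_k` is an extension accepted by engines such as vLLM rather than an OpenAI-spec field (with the OpenAI Python SDK it would be passed through `extra_body`). The validation ranges and the model name `my-model` are assumptions for illustration.

```python
import json
from typing import Any, Optional


def build_chat_request(model: str, prompt: str, *, max_tokens: int = 512,
                       temperature: float = 0.7, top_p: float = 0.9,
                       top_k: Optional[int] = None, stream: bool = False) -> dict[str, Any]:
    """Return an OpenAI-compatible /v1/chat/completions body with sampling settings."""
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature is expected in [0, 2]")
    if not 0.0 < top_p <= 1.0:
        raise ValueError("top_p is expected in (0, 1]")
    body: dict[str, Any] = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
        "top_p": top_p,
        "stream": stream,
    }
    if top_k is not None:
        body["top_k"] = top_k  # engine extension (e.g., vLLM), not an OpenAI-spec field
    return body


if __name__ == "__main__":
    # "my-model" is a placeholder; use the id returned by GET /v1/models
    print(json.dumps(build_chat_request("my-model", "hello", temperature=0.2, top_k=20), indent=2))
```

Lower temperature and smaller top_p or top_k make output more deterministic, which usually suits extraction and QA tasks; creative generation tends to use higher values.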

Debugging & API Exposure:

EAS provides online debugging tools on the console—interact with the deployed model, test inference, and obtain callable endpoints and tokens for use in downstream applications.

WebUI Access:

A direct interface for interacting with the LLM, useful for business users and QA.

Common Deployment and Inference Workflow

Acceleration is enabled by appending --backend=vllm to the run command or by selecting the engine in the deployment console.

Integration with Other Alibaba Cloud Services

Modern AI solutions increasingly rely on multi-service cloud integration. Alibaba Cloud PAI natively connects to:

OSS (Object Storage Service)

Primary repository for datasets, model checkpoints, and user business data.

Multiple integration options support high-speed sequential and random access:

JindoFuse: For local-style direct mounting.

ossfs 2.0: High-performance, ideal for large file streaming and concurrent access.

OSS Connector for AI/ML: Native PyTorch integration for high-throughput data ingestion during model training.
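Once a bucket path is mounted (for example with JindoFuse or ossfs), preprocessing or training code can treat it as an ordinary directory. The minimal sketch below streams records from JSONL shards under a mount point. The mount path `/mnt/data/sft` and the one-JSON-object-per-line layout are assumptions; shards are read sequentially, which suits the streaming access pattern described above.

```python
import json
from pathlib import Path
from typing import Any, Iterator


def iter_jsonl(mount_dir: str, pattern: str = "*.jsonl") -> Iterator[dict[str, Any]]:
    """Stream records from every JSONL shard under a (mounted) directory, in path order."""
    for shard in sorted(Path(mount_dir).rglob(pattern)):
        with shard.open("r", encoding="utf-8") as f:
            for line in f:  # line-by-line keeps memory flat for large shards
                line = line.strip()
                if line:
                    yield json.loads(line)


if __name__ == "__main__":
    # Hypothetical mount point configured for the DLC or EAS job
    mount = "/mnt/data/sft"
    if Path(mount).is_dir():
        for i, record in enumerate(iter_jsonl(mount)):
            print(record)
            if i == 2:
                break
```

Because the mount exposes POSIX paths, the same loop works unchanged against a local copy of the data, which makes it easy to test before pointing it at OSS.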

MaxCompute

Big data platform for preprocessing, storing, and managing large-scale tabular/textual data.

Accessible within PAI pipelines and via SDKs (pyodps) for advanced AI analytics, query, and ETL.
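For the SDK route, a minimal PyODPS sketch that pulls a sample of rows for inspection might look like the following. The project, endpoint, table, and partition values are placeholders, and the credential variable names `ALIBABA_CLOUD_ACCESS_KEY_ID` / `ALIBABA_CLOUD_ACCESS_KEY_SECRET` are an assumed convention. The `pyodps` package is imported inside the function so the query-building helper can be tried without it installed.

```python
import os
import re

IDENT = r"[A-Za-z_][A-Za-z0-9_]*"


def sample_query(table: str, partition: str, limit: int = 100) -> str:
    """Build a simple sampling query; identifiers are validated to avoid SQL injection."""
    if not re.fullmatch(IDENT, table):
        raise ValueError(f"unexpected table name: {table!r}")
    if not re.fullmatch(IDENT + r"='[0-9A-Za-z_-]+'", partition):
        raise ValueError(f"unexpected partition spec: {partition!r}")
    return f"SELECT * FROM {table} WHERE {partition} LIMIT {int(limit)}"


def fetch_sample(project: str, endpoint: str, sql: str) -> list:
    """Run SQL on MaxCompute via PyODPS and return rows as lists of values."""
    from odps import ODPS  # pip install pyodps

    o = ODPS(
        os.environ["ALIBABA_CLOUD_ACCESS_KEY_ID"],      # assumed variable names
        os.environ["ALIBABA_CLOUD_ACCESS_KEY_SECRET"],
        project=project,
        endpoint=endpoint,
    )
    with o.execute_sql(sql).open_reader() as reader:
        return [list(record.values) for record in reader]


if __name__ == "__main__":
    # Hypothetical table and partition
    print(sample_query("sft_corpus", "ds='20250901'", limit=10))
```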

VPC (Virtual Private Cloud)

Enables secure, internal network communication between AI components, API endpoints, and storage without exposure to public internet.

Configurable in the EAS and workspace setup for both inbound/outbound network rules, efficient resource access, and private API deployment.

Additionally, AnalyticDB for PostgreSQL, Simple Log Service (SLS), and event-triggered automation are commonly integrated for vector storage, logging, and workflow orchestration, respectively.

LangChain Integration with Alibaba Cloud PAI

LangChain is a leading Python framework for rapidly building LLM-powered applications, especially retrieval-augmented generation (RAG) workflows. PAI-EAS's OpenAI-compatible endpoints let LangChain use an EAS service as the LLM in a chain, including as the generator behind retrieval workflows.

Modes of Use

Native Integration:

Use the PaiEasEndpoint class from the langchain-community package (module langchain_community.llms.pai_eas_endpoint).

Credentials are set via the environment variables EAS_SERVICE_URL and EAS_SERVICE_TOKEN.

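Instantiated in Python as:

```python
import os
from langchain_community.llms.pai_eas_endpoint import PaiEasEndpoint

# Endpoint URL and token come from the environment variables set above
llm = PaiEasEndpoint(eas_service_url=os.environ["EAS_SERVICE_URL"], eas_service_token=os.environ["EAS_SERVICE_TOKEN"])
print(llm.invoke("hello"))
```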

Prompt Chaining and Retrieval:

Combine with LangChain’s chaining classes, memory, and agent abstractions.

Full support for streaming, context injection, custom prompts, and business logic workflows.

Knowledge Base (RAG) Workflows:

Upload and vectorize business documents in the PAI WebUI, which integrates with local vector stores through LangChain connectors.

Supported file formats include .txt, .md, .docx, and .pdf.
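Conceptually, a knowledge-base workflow splits documents into chunks, embeds them, retrieves the chunks closest to the question, and injects them into the prompt. The sketch below shows that loop in plain Python with a bag-of-words vector standing in for a real embedding model. It is a conceptual illustration, not how PAI-RAG or the WebUI vectorizes files; `chunk_size` and `k` are hypothetical parameters.

```python
import math
import re
from collections import Counter


def chunk(text: str, chunk_size: int = 40) -> list[str]:
    """Split text into chunks of at most chunk_size words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase term counts (a real system would call an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the question."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Inject retrieved context ahead of the user's question."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


if __name__ == "__main__":
    docs = [
        "Refunds are processed within 7 business days after approval.",
        "Our support line is open from 9am to 6pm on weekdays.",
        "Premium members get free shipping on all orders.",
    ]
    chunks = [c for d in docs for c in chunk(d)]
    question = "How long do refunds take?"
    print(build_prompt(question, retrieve(question, chunks, k=1)))
```

Swapping `embed` for a real embedding model and the list for a vector store (for example AnalyticDB) changes the quality of retrieval, not the shape of the loop.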

Example:

PAI-EAS can also be used as a chat model in LangChain (e.g., via PaiEasChatEndpoint) with custom temperature and top-k, plus streaming and async support. This makes it simple to build retrieval, summarization, multi-step conversational, or generative AI workflows.

Step-by-Step Lab Environment Setup Guide

This section provides a comprehensive, hands-on recipe for deploying LLMs on Alibaba Cloud PAI, focusing on practical reproducibility.

Prerequisites

An Alibaba Cloud account with PAI activated and an OSS bucket.

Sufficient account balance or quota to provision the required GPU resources.

(Optional) Enable OpenAI-compatible tooling for API or RAG integration (like LangChain, Dify, Cherry Studio).

Step 1: Activate and Prepare PAI Workspace

Log in to Alibaba Cloud.

Go to PAI Console → Create or select a workspace.

Configure:

Default OSS bucket for data/model storage.

Grant the relevant RAM users or roles the AliyunPAIFullAccess policy.

Associate required GPU/CPU/General Computing resources.

Set up members and notification/event rules as desired.

Step 2: Prepare Storage and Data (OSS/MaxCompute)

Upload datasets, model checkpoints, and business files to the OSS bucket.

For advanced or preprocessed data (e.g., custom tables or features), prepare the data in MaxCompute and configure access permissions.

Step 3: (Optional) Model Training with PAI Designer

In the PAI console, go to "Workspaces" and select your workspace.

Under “Model Development”, enter "Visual Modeling (Designer)".

Use LLM templates for data processing, fine-tuning (e.g., QLoRA, LoRA), and batch inference.

Specify pipeline data storage paths, training parameters, and select model(s) to run.

Step 4: Model Deployment via EAS (Elastic Algorithm Service)

Public Model Deployment (easiest):

Go to the EAS portal: Model Deployment → Model Online Services.

Click "Deploy Service".

Select deployment method:

"Deploy Web App using Image".

Choose the chat-llm-webui image (latest version).

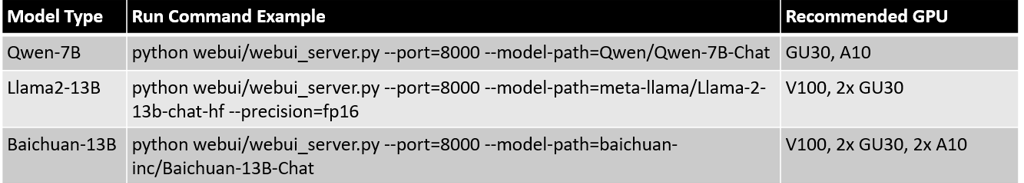

Set run command:

python webui/webui_server.py --port=8000 --model-path=Qwen/Qwen-7B-Chat [--backend=vllm]

Define the HTTP port (e.g., 8000).

Set resource type/group and instance specs (e.g., ml.gu7i.c16m60.1-gu30 for 7B models).

Click "Deploy".

Custom Model Deployment:

Upload your model in Hugging Face format, including config.json and the other configuration files, to OSS.

In the service configuration, mount the OSS path and point the run command at it (e.g., --model-path=/data if the path is mounted to /data).

Set --backend to the selected acceleration engine.
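Before uploading, it can help to sanity-check that the directory looks like a Hugging Face-format checkpoint, since a missing config.json or tokenizer file often surfaces only as a failed deployment. The sketch assumes a typical layout (config.json, `*.safetensors` or `*.bin` weights, and a tokenizer file); the exact required files vary by model family and engine, so the file lists are assumptions.

```python
import json
from pathlib import Path

# Assumed typical Hugging Face layout; some model families ship other tokenizer files
TOKENIZER_FILES = ("tokenizer.json", "tokenizer.model", "tokenizer_config.json", "vocab.json")
WEIGHT_PATTERNS = ("*.safetensors", "*.bin")


def check_model_dir(path: str) -> list[str]:
    """Return a list of problems; an empty list means the basic layout looks complete."""
    root = Path(path)
    problems = []
    config = root / "config.json"
    if not config.is_file():
        problems.append("missing config.json")
    else:
        try:
            json.loads(config.read_text(encoding="utf-8"))
        except json.JSONDecodeError as exc:
            problems.append(f"config.json is not valid JSON: {exc}")
    if not any(any(root.glob(p)) for p in WEIGHT_PATTERNS):
        problems.append("no *.safetensors or *.bin weight files")
    if not any((root / name).is_file() for name in TOKENIZER_FILES):
        problems.append("no recognized tokenizer file")
    return problems


if __name__ == "__main__":
    import sys
    issues = check_model_dir(sys.argv[1] if len(sys.argv) > 1 else ".")
    print("looks OK" if not issues else "\n".join(issues))
```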

Step 5: Testing and API Integration

WebUI Testing:

Use built-in WebUI to send test queries or validate business-specific tasks.

API Testing:

Retrieve the endpoint URL and token from "Overview" in the EAS service details.

Test using Python requests, HTTP via curl, or compatible OpenAI SDK (Python, JS, etc.).

Sample call (Python, OpenAI SDK with vLLM):

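```python
from openai import OpenAI

# Endpoint URL and token come from "Overview" in the EAS service details
client = OpenAI(api_key="<EAS_TOKEN>", base_url="<EAS_ENDPOINT>/v1")

# Use the model id reported by the server instead of hard-coding it
model_id = client.models.list().data[0].id

chat_completion = client.chat.completions.create(
    messages=[
        {"role": "system", "content": "..."},
        {"role": "user", "content": "hello"},
    ],
    model=model_id,
    max_tokens=1024,
    stream=True,
)

for chunk in chat_completion:
    # Some streamed chunks carry no text (role-only or final chunks)
    if chunk.choices and chunk.choices[0].delta.content:
        print(chunk.choices[0].delta.content, end="", flush=True)
```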

LangChain Integration:

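Install the langchain-community package (pip install langchain-community).

Set EAS_SERVICE_URL and EAS_SERVICE_TOKEN as environment variables.

Combine PaiEasEndpoint with a prompt template for prompt chaining and inference logic (e.g., prompt | llm; LLMChain also works but is deprecated in recent LangChain releases).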

Step 6: (Optional) RAG/LangChain Knowledge Base Integration

In the WebUI, enable LangChain, upload business files (TXT, PDF, Markdown, etc.), and vectorize them.

For RAG services, configure PAI-RAG or an AnalyticDB vector store and connect through the OpenAI-compatible API.

Step 7: Monitoring, Scaling, and Advanced Configuration

Monitor resource consumption (GPU/CPU/Memory) and logs from EAS and Simple Log Service.

Tune concurrency and batch settings via BladeLLM/vLLM and resource group policies.

Use advanced deployment options for distributed inference of ultra-large models.
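When tuning concurrency and scaling, a back-of-the-envelope estimate from Little's law (requests in flight = arrival rate × latency) gives a starting point for instance counts. The sketch below is generic capacity arithmetic, not EAS's autoscaling algorithm; the per-instance concurrency limit and the 70% target utilization are assumptions you would measure or choose for your own model and engine.

```python
import math


def in_flight(qps: float, latency_s: float) -> float:
    """Little's law: average concurrent requests = arrival rate x time in system."""
    return qps * latency_s


def replicas_needed(qps: float, latency_s: float, per_instance_concurrency: int,
                    target_utilization: float = 0.7, min_replicas: int = 1) -> int:
    """Instances required to keep each below the target share of its concurrency limit."""
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    usable = per_instance_concurrency * target_utilization
    return max(min_replicas, math.ceil(in_flight(qps, latency_s) / usable))


if __name__ == "__main__":
    # Hypothetical load: 20 req/s, 6 s average generation time, 32 concurrent sequences per instance
    print(replicas_needed(qps=20, latency_s=6, per_instance_concurrency=32))
```

With these numbers, about 120 requests are in flight on average; at roughly 22 usable slots per instance that rounds up to 6 instances. Headroom below 100% utilization absorbs bursts while new instances start.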

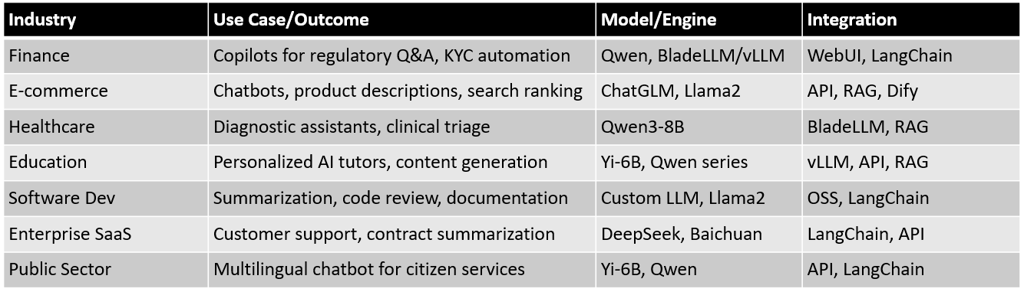

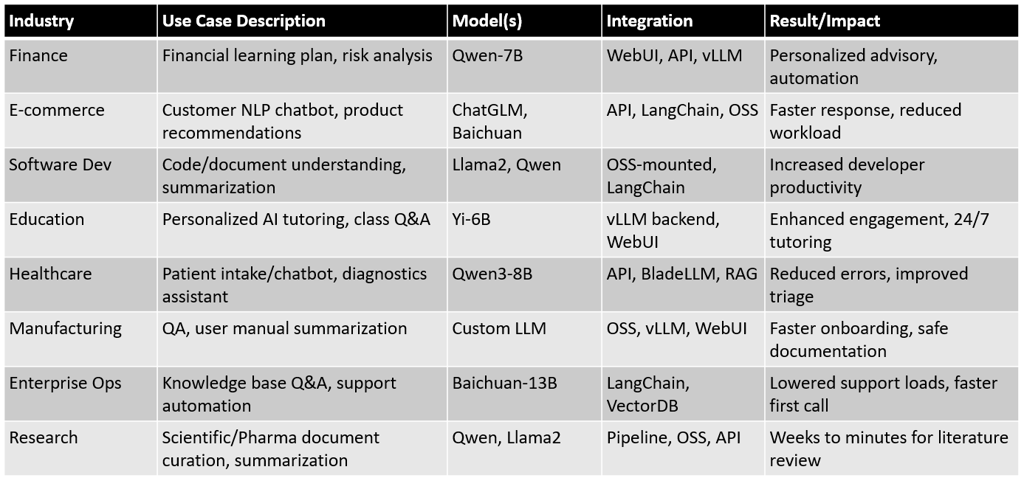

Sample Table: LLM Industrial Use Cases on Alibaba Cloud PAI

Explanation:

Each model and hardware pairing is chosen for the best fit, minimizing cost for the required throughput and latency. Because the run command takes the model path as a parameter, users can switch LLMs rapidly by changing that path, which makes the infrastructure highly reusable for A/B benchmarking and model selection.

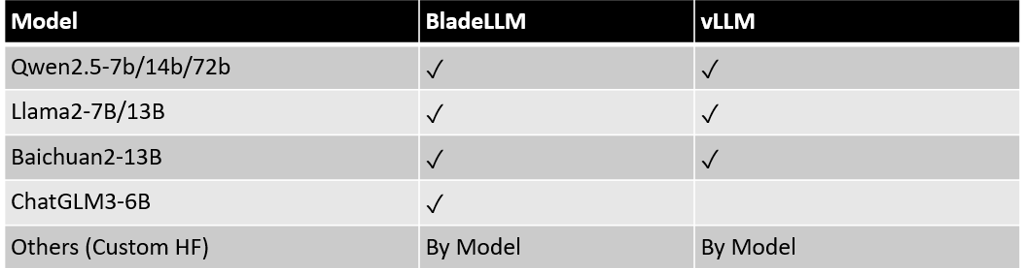

Inference Acceleration Engines: BladeLLM and vLLM on PAI

The performance and cost of LLM inference depend heavily on the efficiency of the back-end inference engine. PAI-EAS supports both BladeLLM and vLLM for low-latency, high-throughput inference.

BladeLLM

A proprietary inference engine developed by Alibaba Cloud, optimized for LLM serving on heterogeneous GPU clusters.

Key features:

Custom operator library (BlaDNN) and AI compiler-based FlashNN for kernel-level performance.

Supports model quantization (GPTQ, AWQ, SmoothQuant) and advanced memory management.

Distributed inference with tensor and pipeline parallelism, allowing ultra-large models to be served across multiple GPUs or nodes (context windows of up to 280K tokens with fp16 weights and KV cache).

Continuous batching and an asynchronous runtime boost both token throughput and request throughput (QPS) by 2–3x over open-source baselines.

Speculative decoding to reduce per-token latency, and prompt caching for faster responses to repeated or related queries.
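Much of the gain from continuous batching comes from scheduling. With static batching, a batch occupies the GPU until its longest request finishes; with continuous batching, a slot is refilled as soon as any request completes. The toy simulation below counts decode steps under both policies, assuming one token per request per step and ignoring prefill. It illustrates the mechanism only, not BladeLLM's actual scheduler or its reported speedup.

```python
import heapq


def static_batching_steps(output_lens: list[int], batch_size: int) -> int:
    """Each fixed batch runs until its longest request finishes."""
    return sum(max(output_lens[i:i + batch_size])
               for i in range(0, len(output_lens), batch_size))


def continuous_batching_steps(output_lens: list[int], batch_size: int) -> int:
    """A freed slot is immediately refilled with the next waiting request (FIFO)."""
    finish_times: list[int] = []  # min-heap of finish times for running requests
    for length in output_lens:
        # Start at step 0 while a slot is idle, otherwise when the earliest request finishes
        start = heapq.heappop(finish_times) if len(finish_times) == batch_size else 0
        heapq.heappush(finish_times, start + length)
    return max(finish_times) if finish_times else 0


if __name__ == "__main__":
    # Hypothetical workload: one long generation followed by many short ones
    lens = [100, 10, 10, 10, 10, 10, 10, 10]
    print("static:", static_batching_steps(lens, batch_size=2))          # static: 130
    print("continuous:", continuous_batching_steps(lens, batch_size=2))  # continuous: 100
```

In the static case, the 100-token request pins its batch while its partner slot sits idle after 10 steps; the continuous scheduler streams the short requests through that slot instead.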

Ease of Use:

Pre-packaged deployment images; models in Hugging Face format can be mounted via OSS.

Simple configuration via WebUI or REST API, with OpenAI-compatible protocols.

vLLM

Open-source, highly scalable inference engine supporting distributed tensor and pipeline parallelism, topology-aware memory allocation, and advanced scheduling.

Key features:

Efficient KV cache management (PagedAttention) for handling requests with ultra-long contexts and hundreds of concurrent users.

Compatible with Ray and Kubernetes clusters; runs on a single node or across multiple nodes, with one or more GPUs per node.

OpenAI API compatibility, making vLLM plug-and-play for workflows expecting standard OpenAI endpoints (including LangChain and RAG platforms).
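vLLM's PagedAttention stores each sequence's KV cache in fixed-size blocks that are allocated on demand and tracked through a per-sequence block table, rather than reserving one contiguous region sized for the maximum context. The toy allocator below mimics only that bookkeeping (no tensors, no attention kernel); the block size of 16 tokens and the pool size are assumptions for illustration.

```python
class PagedKVAllocator:
    """Toy block-table bookkeeping in the spirit of PagedAttention (no real tensors)."""

    def __init__(self, num_blocks: int, block_size: int = 16):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))
        self.block_tables: dict[str, list[int]] = {}  # sequence id -> physical block ids
        self.num_tokens: dict[str, int] = {}

    def append_tokens(self, seq_id: str, n: int) -> None:
        """Grow a sequence by n tokens, taking new blocks only when the last one is full."""
        table = self.block_tables.get(seq_id, [])
        total = self.num_tokens.get(seq_id, 0) + n
        missing = -(-total // self.block_size) - len(table)  # ceiling division
        if missing > len(self.free_blocks):
            raise MemoryError("KV cache pool exhausted; a real scheduler would preempt")
        for _ in range(missing):
            table.append(self.free_blocks.pop())
        self.block_tables[seq_id] = table
        self.num_tokens[seq_id] = total

    def free(self, seq_id: str) -> None:
        """Return a finished sequence's blocks to the pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.num_tokens.pop(seq_id, None)


if __name__ == "__main__":
    kv = PagedKVAllocator(num_blocks=8, block_size=16)
    kv.append_tokens("req-1", 20)  # 20-token prompt -> 2 blocks
    kv.append_tokens("req-1", 13)  # 33 tokens total -> 3 blocks
    print(kv.block_tables["req-1"], len(kv.free_blocks))
    kv.free("req-1")
    print(len(kv.free_blocks))
```

The design point is that waste is bounded by less than one block per sequence, so memory that a contiguous allocator would reserve for unused context can serve additional concurrent requests.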

Engine Selection:

Alibaba Cloud PAI provides a comprehensive, cutting-edge, and enterprise-ready platform for LLM deployment that covers every stage of the model lifecycle—from data processing and training to real-time inference serving and application integration. Features such as pay-as-you-go scaling, high-performance inference (BladeLLM, vLLM), OpenAI-compatible APIs, and native integrations with LangChain, OSS, and MaxCompute empower organizations to operationalize AI for diverse industrial scenarios, reduce complexity, ensure data security, and accelerate innovation.

Full workflow sample implementations, code templates, and OpenAI-compatible endpoints facilitate replication and integration across various platforms and use cases.

API Integration and OpenAI Compatibility

One of PAI-EAS’ defining features is compatibility with the OpenAI API protocol:

REST API: Standard endpoints /v1/chat/completions, /v1/models, etc.

WebSocket/SSE: Built-in support for conversational/multi-turn or streaming outputs.

Client Compatibility: Works directly with the official OpenAI SDKs (Python, Node.js, etc.), Dify, Cherry Studio, and Chatbox.

Parameter Tuning: Set temperature, top-p, max tokens, and similar parameters per inference request.

Security: Token-based authentication is required for all calls.

This compatibility enables zero-code integration with downstream AI chains, RAG services, and virtually all popular AI front-ends and orchestration tools.
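With stream=True, OpenAI-compatible servers send the completion as server-sent events: each event is a `data: <json>` line carrying a chunk, and the stream ends with `data: [DONE]`. The parser below reassembles the text from such lines using only the standard library. It assumes the chat-completions chunk shape (`choices[0].delta.content`) and, for brevity, ignores multi-line events and the `event:`/`id:` fields.

```python
import json
from typing import Iterable


def collect_stream_text(lines: Iterable[str]) -> str:
    """Concatenate delta content for choice 0 from OpenAI-style SSE lines until [DONE]."""
    parts: list[str] = []
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and comments
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):  # may be empty, e.g. a usage-only chunk
            if choice.get("index", 0) != 0:
                continue  # only reassemble the first choice
            content = (choice.get("delta") or {}).get("content")
            if content:
                parts.append(content)
    return "".join(parts)
```

In practice the lines would come from iterating over the HTTP response of a streaming /v1/chat/completions request, decoded as UTF-8; the official SDKs perform this parsing for you.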

Real-World Industrial Use Cases of LLMs on PAI

Alibaba Cloud PAI is used in diverse verticals for impact at scale: