Alibaba Cloud Qwen 3: Redefining Open-Source AI with Hybrid Reasoning

Alibaba Cloud’s Qwen 3 is a next-generation open-source large language model that introduces two hybrid reasoning modes, Thinking and Non-Thinking, for deep analysis and fast responses respectively. Its largest variants use a Mixture-of-Experts (MoE) architecture, which activates only a fraction of the model’s parameters per token to balance performance and efficiency. Supporting 119 languages, Qwen 3 excels in multilingual tasks, code generation, and logic reasoning, outperforming many top-tier models in key benchmarks. With variants ranging from 0.6B to 235B parameters and support for up to 128K context tokens, it’s adaptable across industries like education, enterprise AI, and robotics. Open-sourced under Apache 2.0, Qwen 3 is designed for scalable deployment and fine-tuning on platforms like Hugging Face and Alibaba’s PAI.


Abhishek Gupta

5/19/2025 · 2 min read

Alibaba Cloud’s Qwen 3 is an open-source large language model that combines hybrid reasoning, multilingual fluency, and a scalable architecture. Its two reasoning modes, Non-Thinking for instant answers and Thinking for deeper analysis, let it handle both quick responses and complex tasks like coding and math. Its flagship variants use a Mixture-of-Experts framework, delivering high performance with lower compute cost per token. Trained on 36 trillion tokens across 119 languages, Qwen 3 is designed for global collaboration and commercial use. It is positioned against top models like GPT-4 and Gemini, and is already being applied in healthcare, finance, education, and enterprise AI.

In April 2025, Alibaba Cloud unveiled Qwen 3, the third-generation large language model (LLM) in its Qwen series. With a bold leap in architecture, multilingual capabilities, and hybrid reasoning, Qwen 3 is setting new benchmarks in the open-source AI landscape.

🧠 What’s New in Qwen 3?

Qwen 3 introduces several groundbreaking innovations:

🔄 Hybrid Reasoning Modes

Thinking Mode: Enables deep, multi-step reasoning for tasks like math, logic, and code generation.

Non-Thinking Mode: Offers fast, concise responses for general-purpose queries.

Dynamic Switching: Developers can toggle modes per request or per conversation turn, matching reasoning depth to task complexity.
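To make the mode toggle concrete, here is a minimal Python sketch of how an application might request a mode for a given turn and then separate the model's reasoning from its final answer. It assumes two conventions that should be checked against the model card for the version you deploy: the `/think` and `/no_think` soft-switch tags in the user prompt, and reasoning text wrapped in `<think>...</think>`. The helper names `with_mode` and `split_reasoning` are hypothetical and not part of any Qwen library.

```python
import re

# Soft-switch tags: an assumption based on the Qwen3 model card.
THINK_TAG = "/think"
NO_THINK_TAG = "/no_think"

# Reasoning is assumed to arrive wrapped in <think>...</think>.
_THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def with_mode(prompt: str, thinking: bool) -> str:
    """Append the soft-switch tag that requests a mode for this turn."""
    tag = THINK_TAG if thinking else NO_THINK_TAG
    return f"{prompt.rstrip()} {tag}"


def split_reasoning(output: str) -> tuple[str, str]:
    """Return (reasoning, answer) extracted from raw model output."""
    match = _THINK_BLOCK.search(output)
    if match is None:
        # Non-Thinking output: no reasoning block, only the answer.
        return "", output.strip()
    reasoning = match.group(1).strip()
    answer = (output[:match.start()] + output[match.end():]).strip()
    return reasoning, answer


if __name__ == "__main__":
    print(with_mode("What is 17 * 23?", thinking=True))
    raw = "<think>17 * 20 + 17 * 3 = 391</think>\n\nThe answer is 391."
    print(split_reasoning(raw))
```

Keeping the reasoning separate lets an application log or hide it while showing users only the final answer.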

🧩 Mixture-of-Experts (MoE) Architecture

Sparse Activation: Only a subset of parameters (e.g., 22B out of 235B) is active per token.

Efficiency: Reduces compute cost while maintaining high performance.

Scalability: Lower per-token compute than a dense model of the same total size, although all expert weights must still fit in memory.
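The sparse-activation idea can be illustrated with a toy top-k router in Python. This is a generic sketch of Mixture-of-Experts gating, not Qwen 3's actual implementation: the four scalar "experts", the router logits, and the choice of k=2 are all made-up values for illustration. The last line works out the active share implied by the 22B-of-235B figure above.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k):
    """Pick the k highest-scoring experts and renormalize their gate weights."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}


def moe_forward(x, experts, router_logits, k):
    """Weighted sum over the selected experts only; the rest are never run."""
    gates = route_top_k(router_logits, k)
    return sum(weight * experts[i](x) for i, weight in gates.items())


# Toy experts (illustrative only): each one just scales its input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
logits = [0.1, 2.0, -1.0, 2.0]  # hypothetical router scores for one token

gates = route_top_k(logits, k=2)            # experts 1 and 3 are selected
y = moe_forward(1.0, experts, logits, k=2)  # only 2 of 4 experts execute

# Share of parameters active per token for the 22B-of-235B example.
active_fraction = 22 / 235
print(gates, y, f"{active_fraction:.1%}")
```

Only the selected experts are evaluated, which is where the compute saving comes from. The unselected experts still have to be stored, which is why memory requirements track the total parameter count.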

🌍 Multilingual Mastery

Supports 119 languages and dialects.

Excels in translation, multilingual instruction-following, and cross-lingual reasoning.

🧰 Advanced Agent Capabilities

Native support for Model Context Protocol (MCP).

Robust function-calling and tool use for agent-based workflows.
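On the application side, function calling usually comes down to a small dispatch loop: the model emits a structured call, the host runs the matching function, and the result is sent back as a new message. The Python sketch below assumes the call arrives as a JSON object with `name` and `arguments` keys. That shape, the `get_weather` stub, and the `tool`/`dispatch` helpers are all hypothetical, and the sketch does not implement MCP itself.

```python
import json
from typing import Any, Callable

# Registry mapping tool names to Python callables.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn):
    """Register a function so the dispatcher can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_weather(city: str) -> dict:
    # Hypothetical stub; a real tool would query an external service.
    return {"city": city, "forecast": "sunny"}


def dispatch(tool_call_json: str) -> str:
    """Run one tool call and return a JSON string to send back to the model."""
    try:
        call = json.loads(tool_call_json)
        fn = TOOLS[call["name"]]
        result = fn(**call.get("arguments", {}))
        return json.dumps({"name": call["name"], "result": result})
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        # Report malformed or unknown calls instead of crashing the agent loop.
        return json.dumps({"error": f"{type(exc).__name__}: {exc}"})


if __name__ == "__main__":
    print(dispatch('{"name": "get_weather", "arguments": {"city": "Hangzhou"}}'))
```

Returning errors as data rather than raising gives the model a chance to correct a bad call on the next turn.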

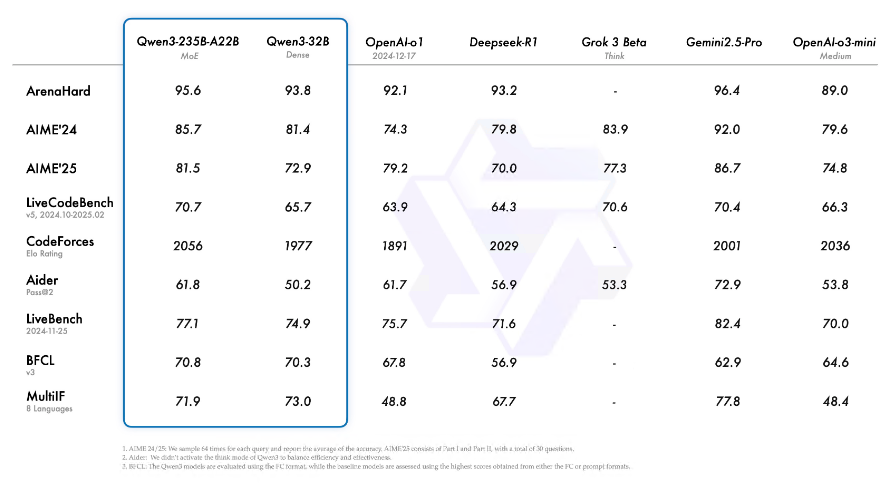

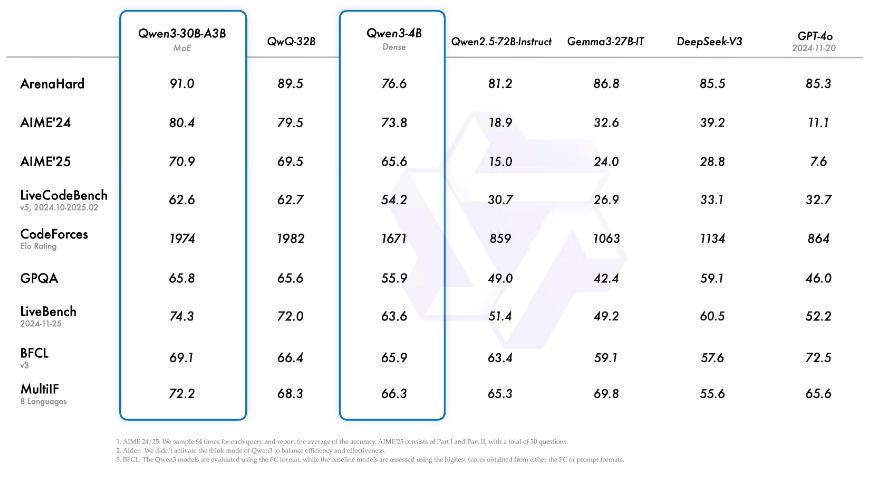

📊 Benchmark Performance

Qwen 3 models outperform many top-tier LLMs across a range of benchmarks.

💡 Emerging Use Cases

Qwen 3’s versatility opens doors across industries:

🧑‍🏫 Education

Multilingual tutoring bots

Math and logic problem solvers with step-by-step reasoning

🧑‍💻 Software Development

Code generation and debugging, evaluated on benchmarks such as LiveCodeBench

Function-calling for autonomous agents

🧳 Enterprise AI

Customer support in 100+ languages

Document summarization and long-context analysis (up to 128K tokens)

🤖 Robotics & IoT

On-device inference with small models (e.g., Qwen3-0.6B)

Smart glasses and autonomous vehicle integration

🛠️ Deployment & Fine-Tuning

Qwen 3 can be deployed and fine-tuned through Alibaba Cloud’s Platform for AI (PAI) and the wider open-source ecosystem:

SGLang and vLLM for accelerated deployment

Ollama, LMStudio, and llama.cpp for local use

Open weights on Hugging Face, ModelScope, and Kaggle for fine-tuning
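Serving frameworks such as vLLM expose an OpenAI-compatible HTTP API, so a client needs little more than a JSON POST. The Python sketch below builds such a request using only the standard library. The server address, the `Qwen/Qwen3-0.6B` model name, and the `chat_template_kwargs` field for toggling thinking are assumptions to verify against your own deployment and the serving framework's documentation. `build_chat_request` and `send` are hypothetical helper names.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000/v1"  # assumed address of a local server
MODEL = "Qwen/Qwen3-0.6B"              # assumed name the server was started with


def build_chat_request(prompt, thinking=True, max_tokens=512):
    """Build (but do not send) a chat-completions request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        # Assumption: the server forwards this field to the chat template.
        "chat_template_kwargs": {"enable_thinking": thinking},
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("Summarize MoE in one sentence.", thinking=False)
    print(req.full_url)
    print(req.data.decode("utf-8"))
    # print(send(req))  # uncomment once a server is running at BASE_URL
```

Separating request construction from sending makes the payload easy to inspect or unit-test without a running server.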

🧭 Strategic Impact

Qwen 3 is not just a technical upgrade—it’s a strategic move by Alibaba Cloud to:

Democratize AI through open source

Compete with GPT-4, Gemini, and Claude in global markets

Empower startups, researchers, and enterprises with modular, multilingual AI